1. What is MQC and how can it help you?

1.1. What is MQC?

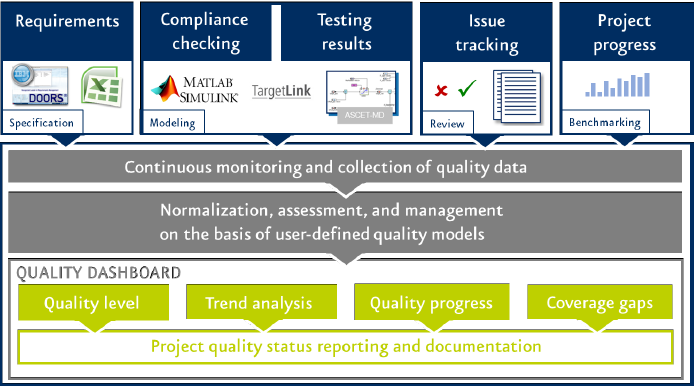

Figure 1.1 Quality assurance and monitoring with MQC

MES Quality Commander® (MQC) is a dynamic quality monitoring and management tool for software development that captures all the decision-making data you need throughout the software life cycle. MQC computes and evaluates the quality and product viability of your software based on relevant development artifacts and the corresponding key performance indicators (e.g., guidelines, complexity, tests, coverage and reviews). User-friendly visualizations of product maturity, weaknesses and need for action during the different stages of a project increase the value of both the development process and the software product.

MQC also optimizes return on investment by keeping trend analyses continuously available, which indicate the level of quality the product can achieve. Efficient visualization of quality and progress across different development projects makes it possible to detect and prevent errors very early. Project-specific evaluation with individually configurable quality models, adaptable to ISO 26262 or ASPICE, enables quality assurance of safety-relevant software development.

MQC offers several reporting options, such as the desktop client itself or the web viewer for sharing information. This allows effort and changes to be controlled and minimized. The web viewer allows multiple users to access your project and provides interactive reporting, along with other features. Data discovery and operational reporting thus give a complete understanding of the data’s impact on quality. MQC’s data import supports several operational tools and export formats, which allows quality monitoring projects to be set up quickly and easily. Data collection can also be automated and integrated into continuous integration, making full use of your existing infrastructure and workflows.

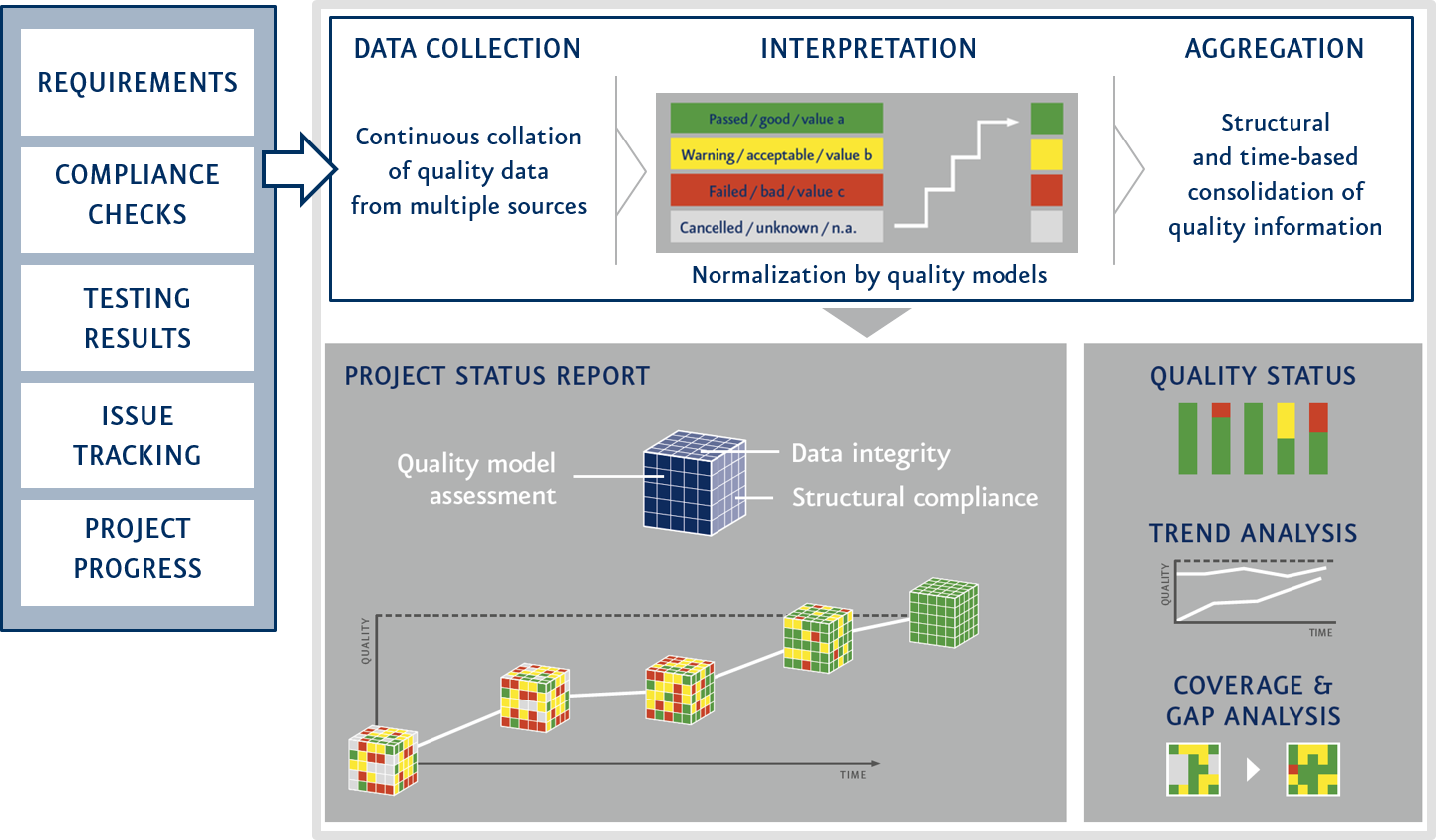

Figure 1.2 Data to quality: MQC’s representation of the software life cycle

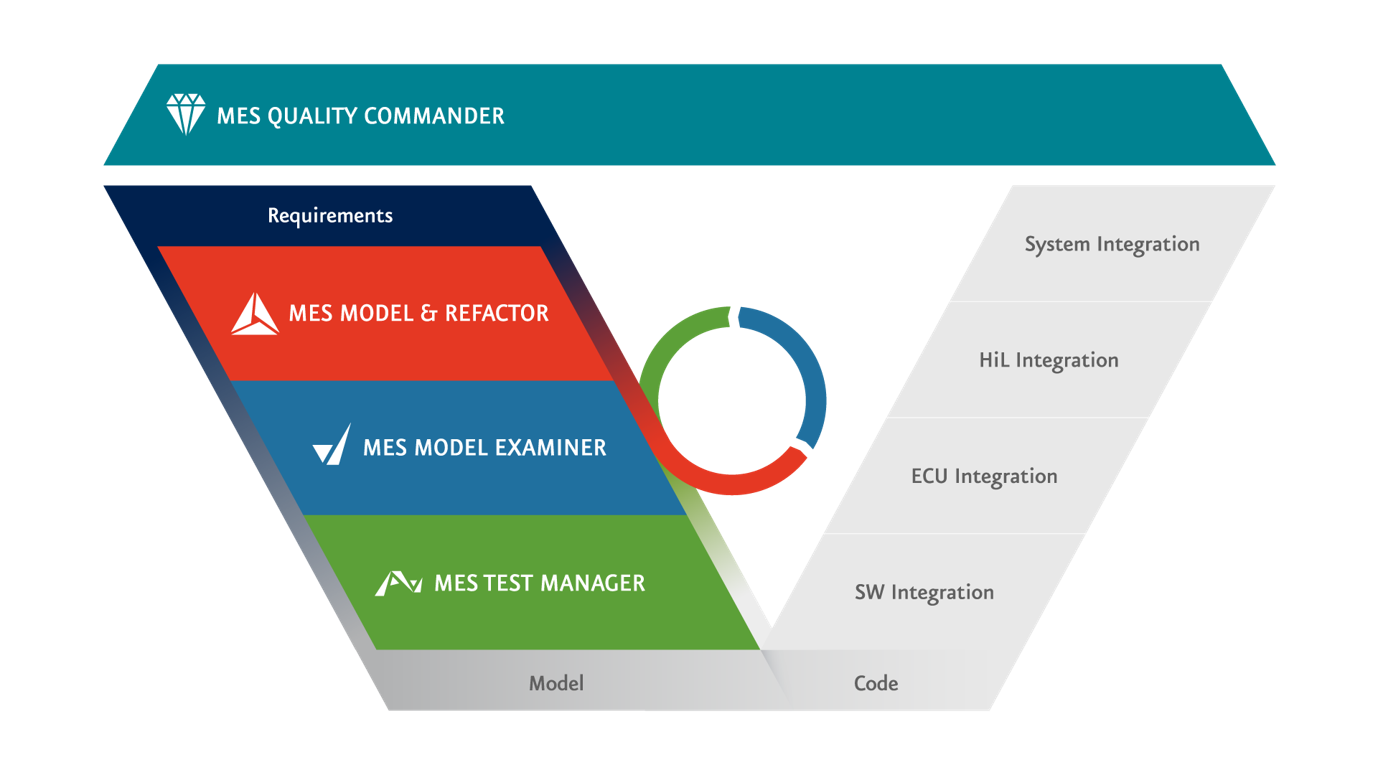

Referring to the V-Model, MQC aims to be the Master tool of monitoring and managing your (model-based) Software Development Lifecycle (SDLC). As shown in Figure 1.3, MQC can collect data from various quality assurance activities at each stage of the SDLC. MQC not only provides efficient extraction of data from MES tools, but also from other tools like TPT, Tessy, Polyspace and Embedded Tester.

Figure 1.3 MQC evaluates data from a wide range of report-generating tools.¶

1.2. From data to quality

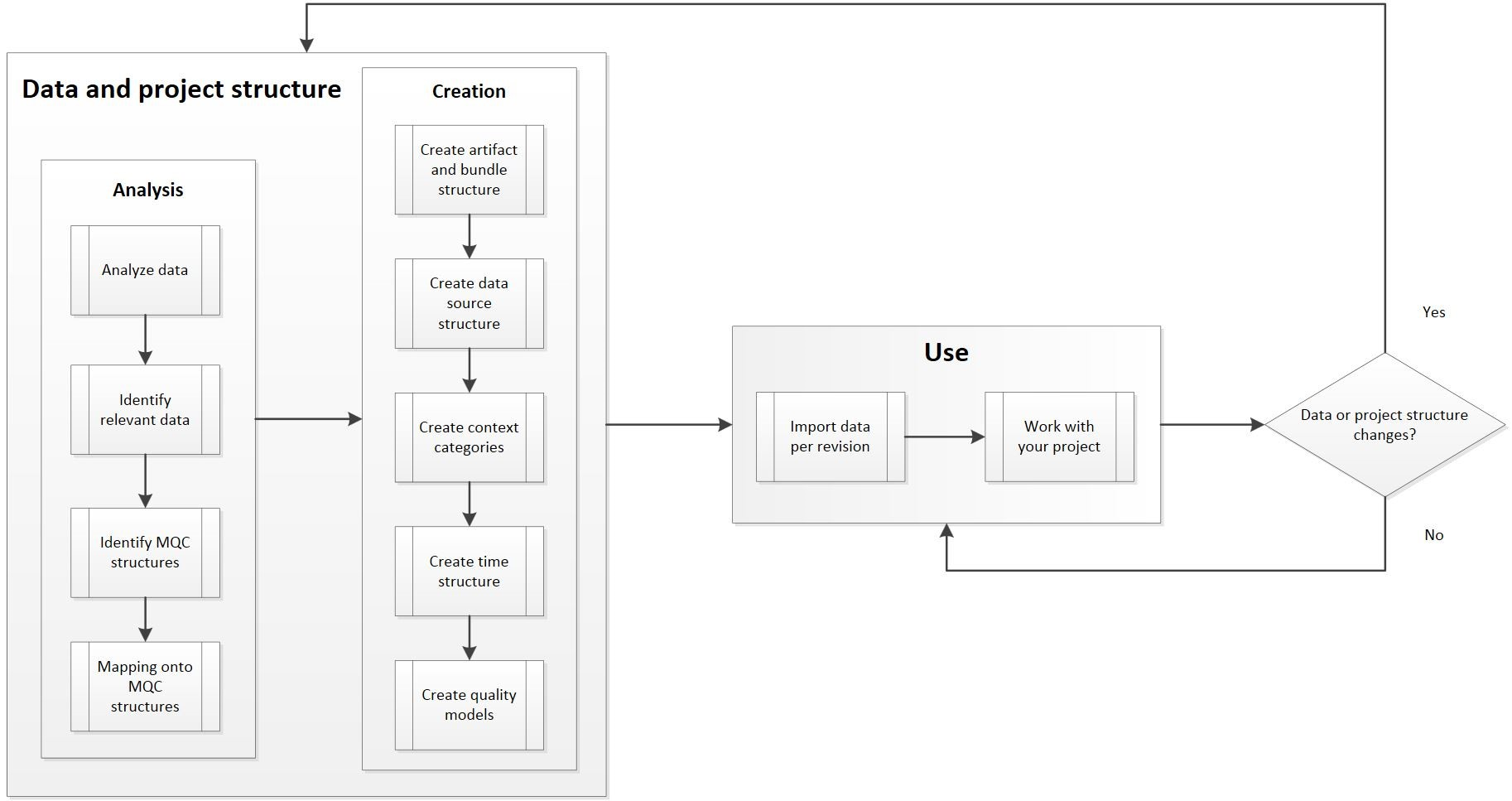

The project creation workflow can be separated into two parts.

The first and most important part to create an MQC project is “Data and project structure”. It contains two processes: “Analysis” and “Creation”. During the “Analysis” process, one has to analyze the available data with respect to the data’s relevance of quality. In particular, the “7Ws” of data analysis (who, where, when, what, why, how and how many) are fruitful to answer to understand the data’s structure. For more details, we refer the user to read the book “Agile Data Warehouse Design” (Lawrence Corr with Jim Stagnitto, 2014, pp. 31f).

After having answered these questions, you should have identified relevant data. The next crucial step is the identification of MQC structures in your data. Such structures can be artifacts and hierarchies, data sources and data values, projects and milestones and much more.

After identifying the structures in your data, they need to be mapped onto the MQC structures. Particularly, this is a very abstract step, because it is a priori vague what “mapping” means in detail. Typically, MES quality and data experts, together with customer process experts, analyze the customer process and provide professional support to deduce MQC structures. All these steps form the foundation of the second process – “Creation”.

Figure 1.4 MQC Project creation workflow¶

Note that the order of the sub-processes of “Creation” shown in Figure 1.4 can be adapted, but we recommend the given sequence. Since Artifacts are the objects for which data arises and for which MQC computes quality, it is a good idea to structure these objects first. This structure is an outcome of the “Analysis” process. Second, define the data source structure, which also follows directly from the “Analysis” process. Because data sources are the objects that yield data for the Artifacts, it is natural to create them after the Artifact structure.

The next step is to create context categories. A context category connects Artifacts and Data Sources. Put simply, a context category specifies which data is expected for the Artifacts.
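The relationship between Artifacts, Data Sources and context categories can be pictured as a small data model. The sketch below is purely hypothetical: the classes `Artifact`, `DataSource` and `ContextCategory` and the method `missing_data` are assumptions made for illustration and are not MQC’s API or file format. It shows how a context category, by stating which data is expected for which Artifacts, makes it possible to spot expected data that an import did not deliver.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


# Hypothetical, simplified model of the MQC concepts; not MQC's actual API.
@dataclass(frozen=True)
class Artifact:
    """An object for which data arises and quality is computed (e.g. a software unit)."""
    name: str
    parent: Optional[str] = None  # name of the parent artifact in the hierarchy


@dataclass(frozen=True)
class DataSource:
    """An object that yields data for artifacts (e.g. a test or coverage report)."""
    name: str


@dataclass
class ContextCategory:
    """Connects artifacts with the data sources whose data is expected for them."""
    name: str
    artifacts: set[Artifact] = field(default_factory=set)
    expected_sources: set[DataSource] = field(default_factory=set)

    def missing_data(self, delivered: set[tuple[str, str]]) -> list[tuple[str, str]]:
        """Return (artifact, data source) name pairs that are expected but not delivered."""
        expected = {(a.name, s.name) for a in self.artifacts for s in self.expected_sources}
        return sorted(expected - delivered)


# Illustrative example with made-up names.
units = ContextCategory(
    name="Software units",
    artifacts={Artifact("ModuleA", parent="Component1"), Artifact("ModuleB", parent="Component1")},
    expected_sources={DataSource("Unit tests"), DataSource("Coverage")},
)
delivered = {("ModuleA", "Unit tests"), ("ModuleA", "Coverage"), ("ModuleB", "Unit tests")}
print(units.missing_data(delivered))  # [('ModuleB', 'Coverage')]
```

Keeping the expectation on the context category rather than on each Artifact means that adding a new Artifact to the category automatically extends the set of expected data.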

Defining the project’s time structure (so-called Revisions) is crucial, because it places changes in the data in chronological order.

The second part of the project creation workflow, “Use”, applies the data and project structure created in the first part. It consists of recurring (possibly automated) data imports, which form the basis for the visualizations in MQC. The visualizations appear automatically; after importing data, you can work with them and perform data discovery. If the structure of the data or of the project changes, you have to adapt the project structure before importing new data. This entire part deals with quality computation and builds directly on the first part.
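To illustrate why Revisions matter for recurring imports, here is a minimal, hypothetical sketch; the classes `Revision` and `QualityHistory`, the methods `import_data` and `trend`, and the metric name `coverage` are assumptions for illustration, not MQC’s interface. Each import is attached to one Revision, and because Revisions are ordered by date, a trend can be read off in chronological order even if the data was imported out of order.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date


# Hypothetical model for illustration only; not MQC's actual API.
@dataclass(frozen=True, order=True)
class Revision:
    """A point in the project's time structure; revisions sort by date, then by label."""
    when: date
    label: str


@dataclass
class QualityHistory:
    """Imported values per revision, so that changes form a chronological sequence."""
    imports: dict[Revision, dict[str, float]] = field(default_factory=dict)

    def import_data(self, revision: Revision, values: dict[str, float]) -> None:
        # Each recurring (possibly automated) import is attached to exactly one revision.
        self.imports[revision] = dict(values)

    def trend(self, metric: str) -> list[tuple[str, float]]:
        # Walk the revisions in chronological order, regardless of import order.
        return [
            (rev.label, self.imports[rev][metric])
            for rev in sorted(self.imports)
            if metric in self.imports[rev]
        ]


# Illustrative example with made-up values, imported out of chronological order.
history = QualityHistory()
history.import_data(Revision(date(2024, 3, 1), "Sprint 2"), {"coverage": 0.72})
history.import_data(Revision(date(2024, 2, 1), "Sprint 1"), {"coverage": 0.55})
print(history.trend("coverage"))  # [('Sprint 1', 0.55), ('Sprint 2', 0.72)]
```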

1.3. How MQC supports quality assurance and quality improvement

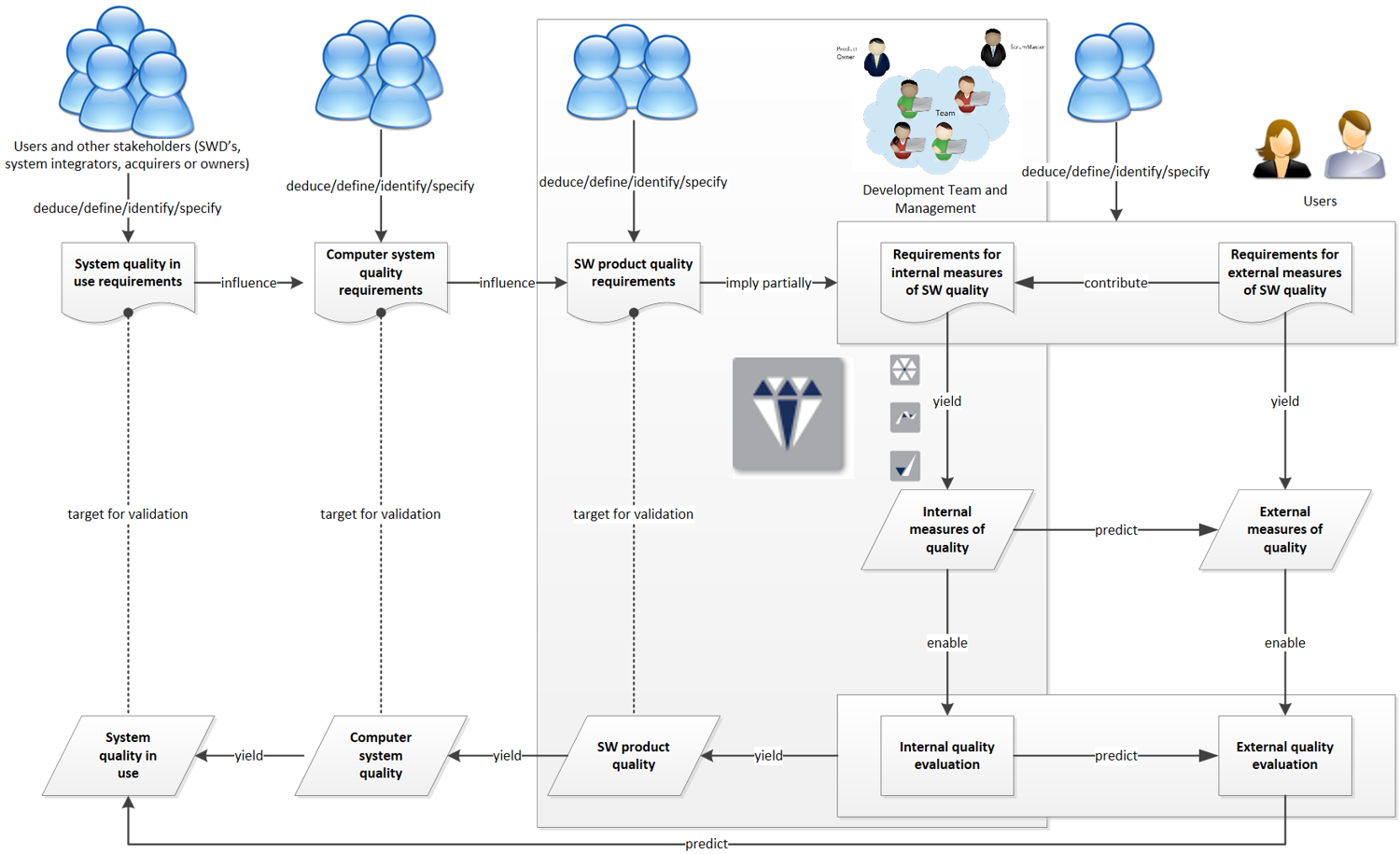

The following illustration provides a coherence of high-level requirements, quality computation and aggregation, as well as (product) quality.

Figure 1.5 MQC Quality Life Cycle Model¶

The grey box in the middle of Figure 1.5 symbolizes that the main purpose of MQC is to improve the quality of the (software) product.